After starting out on its own, DALL-E 3, the image-generating AI, is now integrated into the Bing search engine and accessible via Microsoft’s free web interface or the Copilot application available on smartphones.

As with the other artificial intelligences tested on this site, our guiding principle is to produce a photo-realistic studio portrait that’s as “human” as possible.

For this test, we won’t be talking about a virtual photo shoot, as the web interface doesn’t allow you to create variations from a chosen image.

OpenAI, the artificial intelligence that best understands our needs

OpenAI has been the most popular artificial intelligence company since the end of 2022, thanks to the arrival of ChatGPT, its conversational AI.

DALL-E 3 is OpenAI’s text-to-image model.

DALL-E in color

We start with the same prompt as our Adobe Firefly test:

“Photo shoot in a small photo studio with two light boxes. The model is a Eurasian woman. The studio background is rough concrete.”

A single image is offered, with a model seen from the back and a camera on a very poorly oriented stand.

The scene is unexpected, but the rendering of the light is quite stunning.

The quality is there: the foreground is sharp, the background blurred, the hair is well done, as is the fabric of the dress.

On the other hand, this first model generated by DALL-E 3 is extremely thin, with an exaggeratedly slim waist.

To tighten the framing, we add the term “portrait close-up” to our prompt.

Unlike Adobe’s and Stable Diffusion’s AIs, OpenAI’s AI immediately produces a tighter framing, cutting off the top of the face.

These first three results are of very good quality, but for us they come too close to hyperrealistic illustrations.

To try to increase the realism, we add three instructions to our prompt:

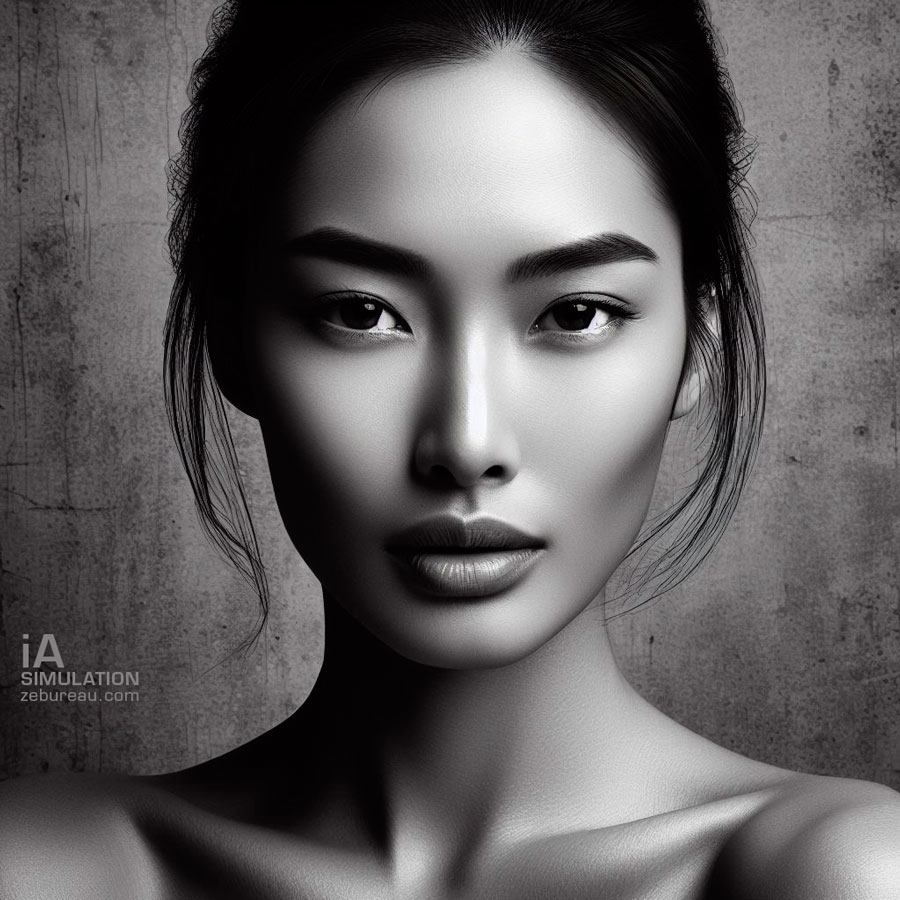

“Professional studio photo shoot with 2 light sources and photo-realistic rendering. Close-up portrait of an Asian woman. Studio backdrop is rough concrete.”

The quality of hair rendering and even studio lighting is stunning, but skin texture seems to be absent.

The proposed poses are coherent, but the images are still very stylized and unnatural.

The studio lighting rendering is interesting and reminiscent of the results we obtained with set.a.light, a 3D lighting simulation software for photo studios (Article coming soon).

It’s amusing to note that the neck section in the foreground of the large image is octagonal!

As in our previous Firefly beta AI test, we then ask for a “Harcourt studio”-style rendering, but with no noticeable results, nor any black-and-white image generation.

DALL-E in black and white

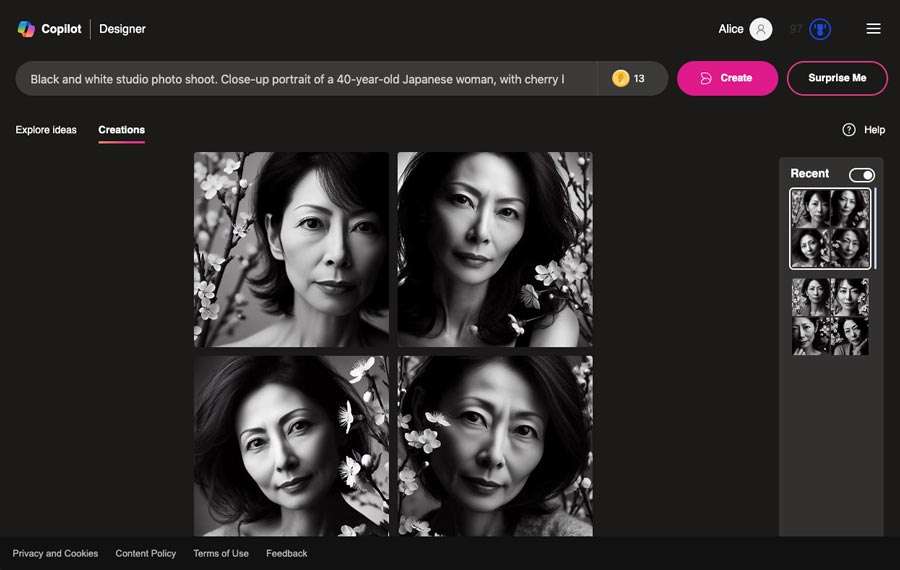

To refocus on the studio portrait, we remove the lighting indication so that lightboxes no longer appear in the images, and instruct the AI to switch to black and white.

What can we say, except that the results are quite breathtaking if you love plastic, and that OpenAI’s AI has obviously discovered some of the secrets of cold beauties!

The renderings are close to those of Midjourney, the benchmark AI, and more sophisticated than those of Adobe’s Firefly (but much less natural).

We can’t stop there, unless we want to promote anorexic models…

What we’re aiming for, as in our studio portraits, is a little more realism with a few of humanity’s inherent flaws…

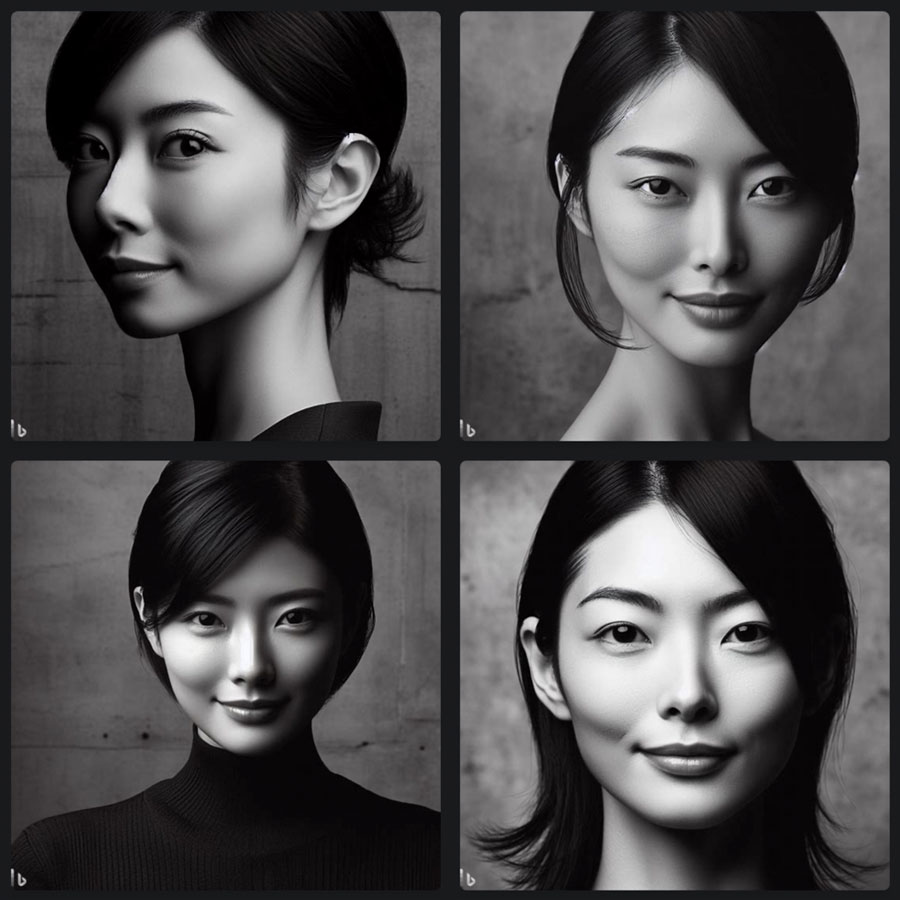

At this point, with our virtual models taking themselves too seriously, we decide to add a “half-smile” to our prompt.

Aside from getting beautiful wax dolls, we seem to be going round in circles in a DALL-E that’s too square.

More than perfect artificial intelligence?

The DALL-E 3 image generator is starting to show us its design biases by offering very skinny female models with extremely thin necks and often very protruding bones.

In all our tests of image-generating AIs, we’ve never used qualifiers like beautiful, attractive or slim, yet OpenAI’s artificial intelligence offered only its own “beauty canons”, with little diversity, unlike the other AIs tested, whether Midjourney, Firefly or Stable Diffusion.

We can legitimately worry about the models on which this type of artificial intelligence is trained, and the stereotypes it can spread en masse across the world on social networks like Instagram or TikTok, at the risk of damaging the mental health of girls and boys who will seek to resemble them.

Ouch, splash in the AI.

Faced with the overly perfect results of DALL-E 3, we carefully “ugly up” our models by adding to our prompt:

“more Japanese face, a little less perfect, with a pagoda necklace”.

But artificial intelligence inexplicably rejects our request!

In search of realism

After the unfair censorship we’ve just suffered, we’re a little hesitant about which words to use next to de-plastify the AI rendering.

Why not simply add an age criterion to our models?

With this very dashing “40-year-old” model, the AI begins to slightly streak the skin (in an unnatural way if zoomed in) and add micro-wrinkles and a few blemishes that add a little more realism.

Finally, we obtain several more satisfactory results in photo rendering, enabling us to select an image to illustrate this article.
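
For readers who like to keep track of their prompt iterations, the step-by-step refinement described above can be sketched in a few lines of Python. This is only an illustration: the `build_prompt` helper is hypothetical, the base text is adapted from our earlier prompt with the lighting indication removed, and the exact wording of the modifiers is an assumption rather than a record of what we typed.

```python
def build_prompt(base, *modifiers):
    """Join a base description and optional modifiers into one prompt string."""
    parts = [base.strip()] + [m.strip() for m in modifiers if m.strip()]
    # End every part with a period so each one reads as its own sentence.
    return " ".join(p if p.endswith(".") else p + "." for p in parts)


# Base description (assumed wording, adapted from the prompt quoted earlier).
BASE = (
    "Professional studio photo shoot with photo-realistic rendering. "
    "Close-up portrait of an Asian woman. "
    "Studio backdrop is rough concrete"
)

# Each step adds one modifier to the previous one (illustrative wording).
STEPS = [
    (),
    ("Black and white",),
    ("Black and white", "Half-smile"),
    ("Black and white", "Half-smile", "40-year-old model"),
]

prompts = [build_prompt(BASE, *mods) for mods in STEPS]

for number, prompt in enumerate(prompts, start=1):
    print(f"Step {number}: {prompt}")
```

Keeping each iteration as a separate entry makes it easier to see which added word changed the rendering, and to reuse the same sequence with another image generator.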

Studio portraiture with DALL-E 3 versus Midjourney, Stable Diffusion and Adobe Firefly

Image rendering

Between the free versions of the “text-to-image” AIs tested for this blog and the (mandatory) paid version of Midjourney, the results obtained at image level have very different styles.

In terms of portrait rendering, DALL-E 3 is still no match for the photorealism of Midjourney 5.2 or Stable Diffusion 1.5.

Firefly, on the other hand, produces fairly natural images, albeit with a few shortcomings.

DALL-E 3, like Firefly, struggles a little to render skin texture, while Stable Diffusion and especially Midjourney appear to be a notch above.

DALL-E 3’s ability to understand

During our first test with Adobe Firefly Beta, we were forced to simplify our requests, as this artificial intelligence, still in beta version, was not capable of understanding everything, nor of convincingly generating complex images. We reused these simplified prompts for the other AIs.

Both the test with Stable Diffusion 1.5 and the test with Midjourney were disappointing, as these AIs only interpreted a small part of the prompts’ content.

DALL-E 3, thanks to the experience acquired by ChatGPT, is the most efficient in this respect, and generates far fewer errors than its three competitors.

For example, DALL-E 3 was able to create the requested pagoda-shaped pendants, while Midjourney 5.2, Stable Diffusion 1.5 and Firefly beta were unable to do so!

The request for backgrounds with cherry blossoms, on the other hand, was understood by all four AIs.

Respecting the prompt

We test DALL-E 3’s comprehension capabilities with a more detailed prompt featuring a photographer:

“Shooting in a photo studio. A photographer takes a black-and-white photo of a half-smiling 35-year-old Japanese woman wearing a cherry-tree patterned dress. Two octagonal light boxes, one large and one small, illuminate the model from either side. The back of the studio is made of rough concrete.”

What’s interesting is that our requests are pretty well understood, even if the AI takes a few liberties: the lightboxes are sometimes placed behind the model, and unrequested figures occasionally wander around in the background.

Images are generated fairly faithfully and with very few misfires: the result is far superior to images generated by Firefly with an equivalent prompt, and even to images produced during our Midjourney 5.2 test.

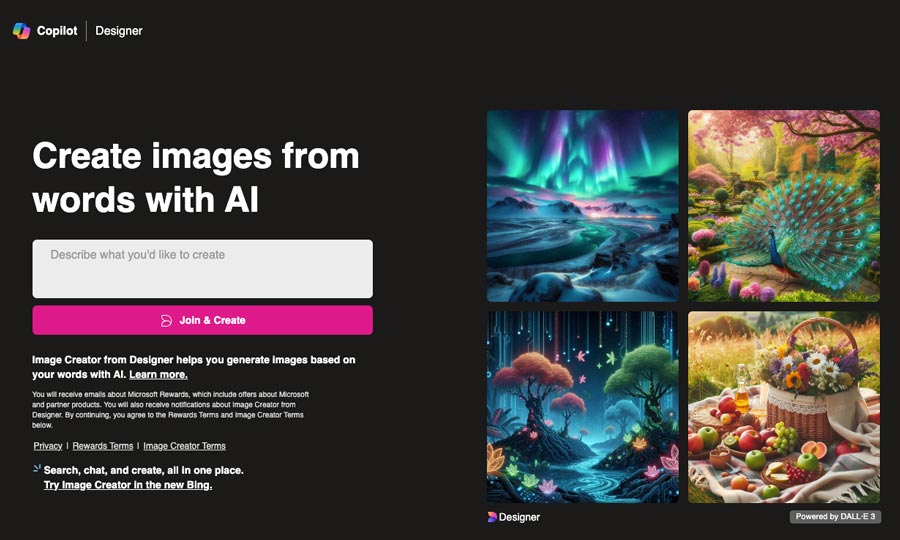

Microsoft’s web interface for DALL-E 3

Microsoft offers several different interfaces using the DALL-E 3 image generator, as well as a dedicated application called “Copilot”.

Using the bing.com search engine, click on the “Create” button to access the DALL-E interface shown below.

You can enter a prompt in the first form, but Microsoft requires you to have an account and log in to benefit from the image generator.

Once logged in, the interface offers only a single-line input field (impractical for checking your full prompt) and a “Create” button for generating images.
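
Since the single-line field hides most of a long prompt, one workaround is to proofread the text elsewhere before pasting it in. The snippet below is a minimal sketch of that idea using Python’s standard `textwrap` module; the `preview` function and the 60-character width are our own illustrative choices and have nothing to do with Microsoft’s interface.

```python
import textwrap

# The detailed prompt quoted earlier in this article.
PROMPT = (
    "Shooting in a photo studio. A photographer takes a black-and-white "
    "photo of a half-smiling 35-year-old Japanese woman wearing a "
    "cherry-tree patterned dress. Two octagonal light boxes, one large and "
    "one small, illuminate the model from either side. The back of the "
    "studio is made of rough concrete."
)


def preview(prompt, width=60):
    """Return the prompt wrapped to `width` columns for proofreading."""
    # Collapse stray spaces and line breaks left over from copy-pasting.
    collapsed = " ".join(prompt.split())
    # Keep hyphenated words such as "black-and-white" on a single line.
    return textwrap.fill(collapsed, width=width, break_on_hyphens=False)


wrapped = preview(PROMPT)
print(wrapped)
print(len(" ".join(PROMPT.split())), "characters in total")
```

Once the wrapped version reads correctly, the original one-line string is the one to paste into the input field.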

A small number to the right of the search field indicates the number of requests that can be generated without waiting.

Once this credit has been used up, you may have to wait several minutes before a new request is processed.

The number of images created per request is random and can range from 1 to 4.

Clicking on one of the resulting images takes you to its original 1024-pixel version for downloading.

On the right-hand side of the screen, you can access the history in the form of small thumbnails.

Finally, the “Surprise me” button is of no use other than to generate an image that has nothing to do with the current series…