Firefly is accessible free of charge online with a simple Adobe account. We spent two and a half hours in its company and tried our hand at a virtual portrait shoot.

Starting from a fairly simple “prompt” (a description of the desired image), we asked Adobe’s AI to generate several versions of portraits.

Firefly beta is still in its infancy, so it quickly reaches its limits when it comes to the subject at hand: studio portrait photography.

The AI doesn’t yet offer (and this is probably not its aim) the precise control over lighting setup and rendering that you get with the 3D studio shooting simulation application we tested for this blog (article coming soon).

An AI quick to draw its images

Once logged into Firefly’s web interface, somewhat hidden in the sub-menus of Adobe’s website, you are invited to describe the first image you want, in your preferred language.

Our virtual portrait session begins with this prompt:

“Photo shoot in a small photo studio with two lightboxes. The model is a Eurasian woman. The studio backdrop is rough concrete.”

Image generation is rapid, but the initial results are rather worrying.

There are major scaling problems, and some positions are a little surprising, such as this female model balancing on her knees, or the image of a man (?) accompanied by a woman who raises her leg for no reason!

Ouch or AI, it’s time to choose

In addition to the above oddities, the term “Eurasian” doesn’t seem to mean much to the Firefly AI.

We then try other prompts that are more precise about lighting placement, but Firefly struggles and is less advanced than DALL-E 3, which in our tests was the best at assimilating a multitude of information.

We go back to much simpler descriptions and, above all, abandon our original idea of showing an entire scene in a photo studio.

By removing the lightboxes from our prompt and adding the term “close-up”, we’re hoping that Adobe’s AI will come up with something more coherent.

The result is a series of fairly natural, realistic portraits in a variety of postures.

Please note: a true “close-up” in portraiture is normally a tighter framing that cuts across the face, but Adobe’s Firefly AI, which we’d have expected to be more proficient on the subject, doesn’t seem to share our definition.

What do you understand, Adobe Firefly?

We decide to abandon color and continue this virtual shoot in black and white.

Thinking that naming an image style would save time, we specify “Harcourt-style portrait”, but like the other AIs tested in this blog, Adobe’s is unfamiliar with the famous French studio, renowned since the 1950s for its star portraits and Fresnel lens-based lighting.

The AI continues to offer us color images with models who don’t all have Asian features… so we’re dropping the term Eurasian.

With “Contrasting black-and-white studio portrait of a woman with Asian features, with a halo of light in the background” we obtain halo styles, none of which are remotely reminiscent of the Harcourt style.

It takes many attempts at wording to find terms that are known or interpretable by Adobe’s AI. We’re a little frustrated.

We’re dropping part of our ambition for portrait control and will simply wait for Adobe Firefly to come up with an acceptable base image from which to generate variations.

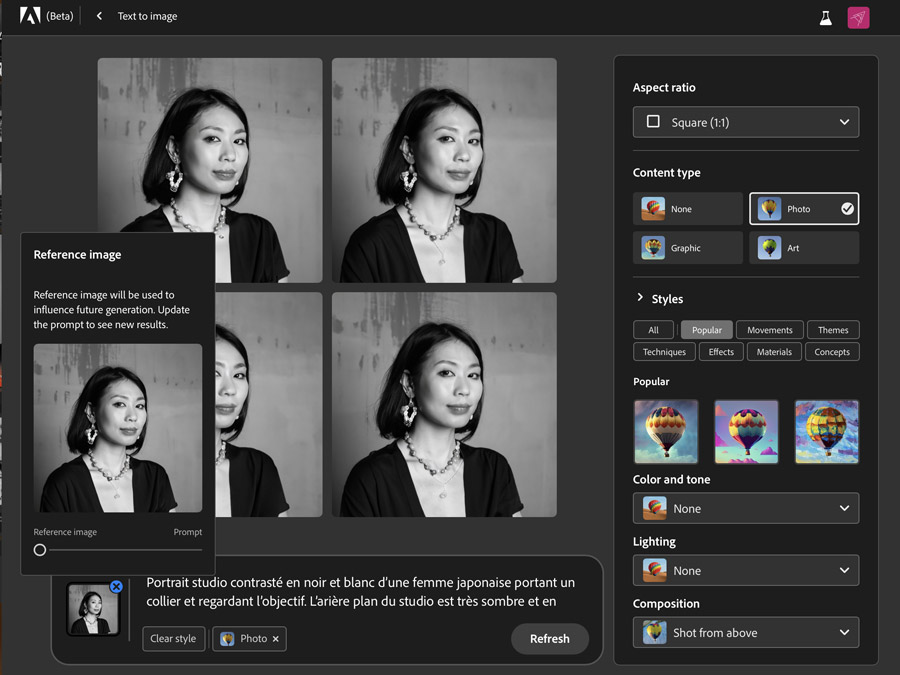

As the term “Asian woman” again produced unconvincing results, our prompt becomes: “Contrasting black-and-white studio portrait of a Japanese woman looking at the lens. The studio background is rough concrete.”

This time, all the initial results were in line with our simplified request.

We then decide to choose a reference image to generate several variations of the portrait.

The one we choose is accessorized “… with a pagoda-shaped necklace”, but we’ll tell you right now: as far as the jewelry is concerned, we never got the pagoda-shaped pendant we wanted, not to mention the earrings we didn’t ask for!

AIs with varying degrees of comprehension.

Adobe is a long way behind DALL-E, the image generator from OpenAI (the maker of ChatGPT) that we tested via Microsoft’s interface, but Firefly still understands more than the Stable Diffusion 1.5 AI tested for this blog.

Only DALL-E 3 was able to interpret the majority of our requests, such as creating splendid pagoda-shaped pendants.

Firefly’s portrait in black and white

While the first portrait selected is fairly realistic, it does have a number of small rendering flaws (as with many other portraits) around the nose, lips and ears.

Could the very pronounced crease on the neck be a sign that AI-generated heads are interchangeable?

Our first overall impression is mixed, and the term “beta”, which accompanies this version of Firefly, seems to take on its full meaning in view of the interpretation difficulties encountered by the AI.

But as with most emerging artificial intelligences, you have to be able to talk to them and get down to their level of understanding.

In a way, this is reassuring, since a certain amount of human intelligence is still needed to make the best use of these tools.

The idiocracy that seems to threaten us all can wait a few more years!

The Adobe Firefly AI web interface

The web version of Adobe’s Firefly interface takes just a few minutes to learn.

On the left, enter your prompt and generate 4 images. You can repeat the image generation process several times until you find an interesting image to use as a starting point.

You then select your reference image, from which you can generate new variants that will be more or less similar: the adjustment is made with a small slider that appears when you click on the saved image to the left of the prompt.

You can also modify the prompt without changing its reference image, but in our case, it seems that these modifications were not always properly taken into account.

On the right-hand side of the screen, you can choose the image format, type, color, lighting and viewing angle, or enter this information directly into the prompt. Finally, a “Styles” section offers different types of rendering.

When we mistakenly closed our reference thumbnail, we lost our model because there’s no real history. You can go back, since you’re in a web browser, but that isn’t necessarily enough to recover a reference image easily. That’s why we didn’t finish our virtual shoot with the same face.

One of the most realistic images produced during our session.

Firefly beta AI and background scenery

Adobe Firefly highlights its ability to modify or replace backgrounds.

Integrated into the latest versions of Photoshop, AI makes it easy to add spectacular elements to natural settings.

What about our virtual portrait indoors?

As part of our studio portrait simulation, we carried out some simple background integration tests, similar to the images we usually produce in our Lyon photo studio.

Our prompt is updated to add this background change to the portrait: “… The studio background is very dark with shadows of leaves”.

For facial expression, we now ask the AI to display a half-smile.

The proposals are varied, and some are interesting and fairly realistic, even if the photo rendering is sometimes a little “plastic”.

Finally, we decided to completely “Japanize” the portraits, replacing the leaf shadows with cherry blossoms, including in the pattern of the dress.

After two and a half hours of “virtual shooting” with Adobe’s Firefly AI, we stopped at this last face, whose composition seems acceptable to us, even if a few inconsistencies remain, particularly in the upper lip.

Artificial intelligence: a way to do away with photographers?

Is 2023 the start of a new era in which image-makers will no longer have to travel the globe?

Or in which advertising agencies will no longer have to pay models for their campaigns?

Or in which photographers will no longer need to find sets for shoots?

While the prospects seem rather exciting for certain photographers who create artistic images, the photography sector as a whole, already struggling since the advent of image banks and then partly “uberized” by intermediary websites that underemploy many photographers, is going to lose yet another part of its business.

Artificial intelligence, by shamelessly siphoning off billions of photographers’ images, has already built up a considerable database that will, in the future, replace the vast majority of us.

While Adobe prides itself on using legal (but unpaid) content as its source material, namely the catalog of the thousands of photographers who at some point entrusted it with their images, other AIs have been able to “fish” for images on the web at will, such as Midjourney, probably the most talented of the text-to-image AIs.

Once an image has been generated by an AI, it is in any case almost impossible to know which sources it was created from. Lawsuits are starting to rain down in the United States, but AI algorithms are able to sidestep plagiarism claims by easily circumventing copyright.

The day is approaching when we’ll all be able to simply scan our face with our cell phone and then create a multitude of adjustable portraits using prompts, with a wide variety of expressions and backgrounds.

While we wait for the end of photo studios, read our “real” stories on this blog: rocker portrait sessions, bachelorette-party studio portraits, intimate portrait sessions, and creative portrait experiments using video projection (articles coming soon).